Camera sensor size is the most important factor in determining overall camera performance & image quality, given the optimal focus, f-stop, ISO, and shutter speed settings have already been obtained.

Below, you’ll learn:

- Why camera sensor size really matters.

- What camera settings produce the highest quality images, without noise, on a consistent basis.

- Basics of Bit Depth & Color Depth.

- How a camera sensor works.


Table of Contents

- Camera Sensor Size Supplemental Videos

- Camera Sensor & Image Quality Basics

- Megapixels & Color: How Camera Sensors Work

- Bit Depth, Color Depth & Image Quality

- Camera Sensor Size – Overview

- Full Frame, Medium Format & Crop Camera Sensors

- Camera Sensor Size – Why It Really Matters

- Picture Noise & Sensor Size

- Dynamic Range, ISO & Sensor Size

- Camera & Sensor Recommendations

- How to 10X Your Learning Speed

Camera Sensor Size Supplemental Videos

The following video supplements the learning material found in the guide below, making it much easier to visualize.

Becoming a histogram expert is critical to understanding why camera sensor size matters, in turn producing the best image quality.

Camera Sensor & Image Quality Basics

Understanding camera sensor size and why it actually matters is one of the most important aspects of learning photography.

Selecting the best overall camera settings (ISO, shutter speed, f-stop) and image quality attributes (dynamic range, noise, bit-depth, sensor size) is impossible without a basic understanding of how a camera sensor works.

What is a Camera Sensor?

The camera sensor, also known as an image sensor, is an electronic device that collects light information, consisting of color & intensity, after it passes through the lens opening, known as the aperture.

Shutter speed defines the length of time this light information is collected by the camera sensor.

ISO determines the amplification the light information receives as it’s conveyed into the digital world, where it’s stored on a memory card as a picture file.

There are two popular types of image sensors, CMOS sensors (complementary metal-oxide semiconductor) & CCD sensors (charge-coupled device).

Due to its higher performance, especially in low light, and lower cost, the CMOS sensor is found in nearly all modern digital cameras.

CMOS Sensors are defined by their physical size ( surface area for capturing light information ) and the number of light information collecting pixels which make up this surface area.

What is a Pixel in Photography?

The camera sensor is a rectangular grid containing millions of tiny square pixels as shown in the graphic.

Attribution: Wikipedia

Pixels are buckets or wells for collecting & recording light information. They are the base unit of the image sensor.

Digital photography is the process of recording real world color and tones, from a scene or composition, using individual pixels.

Each individual square pixel represents a small sample of the image composition as a whole, consisting of a single color. No more.

The combination of millions of small pixels of varying colors creates the image as a whole.

Let’s call this collection of all pixels the sensor grid.

The “rainbow colored” rectangle on the graphic shows the sensor grid. Pixels are so small that it’s hard to see each individual unit.

Creating Photographs – Pixels, Explained

The goal of the following section is to help you conceptualize how pixels work.

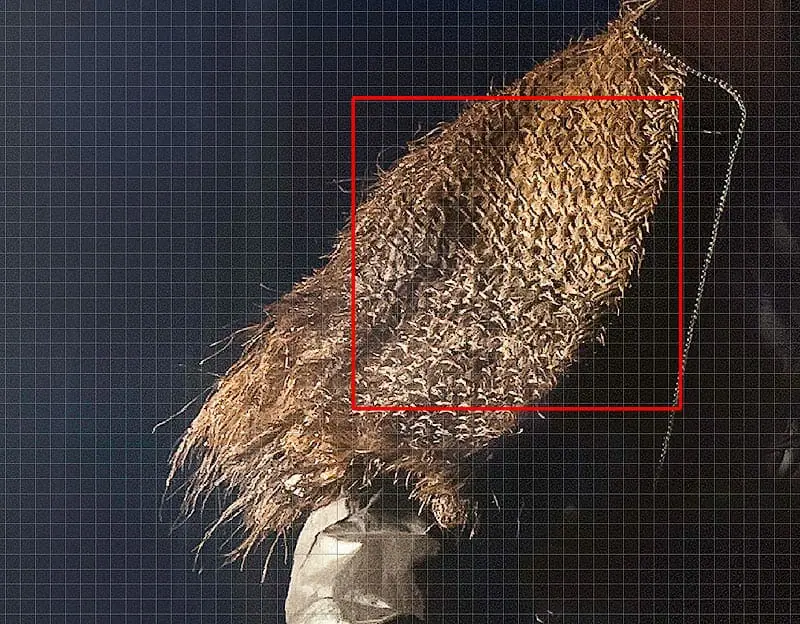

Imagine an image composition, seen through a camera viewfinder, with an imaginary overlaid grid, containing millions of tiny uniformly sized squares, as seen in the graphic below.

Pretend the following graphics are the real world scenes that you’re seeing through the camera viewfinder or on the back of your camera live view screen.

As we start to zoom in on the red box the squares become closer and closer to their actual size.

Finally, we zoom in so far that each individual square can be seen at its actual size.

These squares are so tiny that they contain no detail, only a single color or tone, as shown below.

![]()

Take a look at different objects around you. If you look closely, under a large amount of magnification, everything becomes a single color on a very small scale.

The combination of all these small colored squares produces the scene or composition as a whole.

Let’s call this collection of imaginary small colored squares the image grid.

NOTE: This image was captured before twilight, in the pouring rain, on the Li River in China. This shooting scenario is the ultimate test for a camera sensor.

![]()

Each square pixel on the sensor grid corresponds to a tiny square on the imaginary image grid.

- The sensor grid is an actual object that collects light information about a scene, using pixels.

- The image grid is an imaginary object which breaks the real world scene or composition into millions of tiny squares.

- The pixel’s only job is to record the single specific color of each tiny imaginary square on the image grid.

Each pixel therefore only collects a single color, corresponding to a very small sample of the scene being photographed.

When the correct camera settings, shutter speed, ISO and f-stop, are selected, each pixel on the sensor grid will collect & record the exact color of the corresponding square on the image grid.

In turn, a digital image is produced, from millions of pixels, which matches the real world composition seen through the viewfinder.

In photography, this is known as the correct exposure.

When the incorrect camera settings are selected, the squares on the pixel grid don’t match the squares on the image grid, producing a digital image that doesn’t match the scene being photographed.

Digital photography is the process of recording real world color information represented by the image grid, and relaying it into the digital world represented by the pixel grid.

The photographer’s goal is to select the correct camera settings relaying this information with precision & accuracy, producing a digital image that matches what they see through the viewfinder.

The camera sensor, which contains the pixel grid, is the tool used to collect this information & perform the task.

If any part of this section was confusing, watch the video provided at the top of this page.

Megapixels & Color: How Camera Sensors Work

Cameras are rated by the number of total pixels their sensors contain.

Mega is the metric prefix denoting 10^6, also stated as “10 to the 6th power”, which can be written as 1,000,000 or 1 million.

Megapixel, therefore, means 1 million pixels. This is a standard unit of measure in electronics.

- For example, a 36.2 Megapixel (36.2 million pixel) camera sensor could be 7360 pixels wide and 4912 pixels tall.

- Multiplying the 7360-pixel width by the 4912-pixel height gives 36,152,320 pixels, which rounds to the 36.2 million pixel sensor rating.

- Simply put, this would be a 7360-pixel wide by 4912-pixel tall grid, containing a total of about 36.2 million pixels.
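As a quick check of the arithmetic above, the pixel count can be worked out in a few lines of Python. This is only a sketch; `megapixels` is a hypothetical helper name used for illustration.

```python
def megapixels(width_px: int, height_px: int) -> float:
    """Return the total pixel count in megapixels (millions of pixels)."""
    return width_px * height_px / 1_000_000


# The 7360 x 4912 grid from the example above.
total_pixels = 7360 * 4912
print(total_pixels)                        # 36152320
print(round(megapixels(7360, 4912), 1))    # 36.2
```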

More Megapixels doesn’t always equal better image quality!

Let’s discuss…

Pixels – Wells for Collecting Light Information

Light is made of photons, small packets that carry light information. Photons are elementary particles which have no mass but carry information about light.

When photons collide or interact with certain materials, such as silicon CMOS image sensors, free electrons are released from the sensor material, producing a small electric charge. This is known as the Photoelectric effect.

The free electrons are collected and counted by individual pixels on the sensor grid. Each pixel well has a maximum capacity of electrons it can collect. This maximum is known as full well capacity.

A pixel can only display a single color, including black, white, greyscale, and RGB Color values. The color of each pixel is determined by the amount & kind of light information it collects.

Determining Pixel Color & Tone

The number of electrons each pixel well collects determines its brightness, also known as value, on a scale of black to white. The scale of black to white is known as the tonal range or tonal scale.

The brightness of each individual pixel, on a scale of black to white, is known as tonal value, or luminosity.

- The more electrons a pixel collects, the lighter its corresponding tonal value in the image.

- A white pixel contains the maximum amount of electrons.

- A black pixel contains no electrons.

- All values between maximum and minimum produce greyscale tonal values.

Electron counts can’t determine specific color information. Therefore, a color filter is placed over each pixel, helping to determine its color. This is discussed in detail below.

By combining the tonal value & color filter information, the final color is determined for each pixel.

The graphic below shows the tonal range and an arbitrary number of electrons required to create each tonal value.

The goal is to visualize this concept. The number of electrons is made up & does not matter.

More Electrons Collected = Lighter Tonal Values = Lighter Pixels Displayed in Photo

![]()

For example, Pixel Well 1 collected 8 electrons producing a dark tonal value.

Pixel Well 2 collected 22 electrons producing a light tonal value.

Pixel Well 3 collected 13 electrons producing a mid-tone tonal value.

The number of electrons collected by each pixel well produces a corresponding tonal value for that pixel. This tonal value is displayed in the final photo, along with the color.

This information is relayed from the image sensor into the digital world using an electronic signal.

Digital Signal, Brightness & Tonal Value

Each electron produced during the photon-sensor collision carries a small electric charge. The more electrons a pixel collects, the more charge the pixel well contains. Electric charge is a physically measurable value.

This charge is used to transfer the light information, collected by each pixel, into digital information which cameras & computers can understand.

An electronic signal communicates physical real world values into the digital world of binary code.

Each tonal value, on the scale of black to white, has a corresponding signal required to produce it. Specific signal levels produce specific tonal values. The more electrons a pixel collects the stronger the signal it creates.

Less Light = Fewer Electrons = Smaller Signal = Darker Tonal Value

More Light = More Electrons = Larger Signal = Lighter Tonal Value

![]()

When a pixel well fills to the top with electrons, creating the maximum signal, its corresponding tonal value is white, producing a white pixel in the photo.

Since the pixel is full it can no longer collect light information. This is known as a fully saturated pixel well.

In photography terms, this pixel is “clipped”, “blown out” or “overexposed”. Each term refers to the same concept.

Any light that reaches the pixel after it fills isn’t recorded, so detail brighter than this maximum can’t be recovered or used in the final image. It’s gone forever!

When a pixel well contains no electrons it produces no signal. The corresponding tonal value is black, producing a black pixel in the photo.

The tonal values produced by each signal are combined with collected color information to produce each pixel’s final color within the photo.
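The relationship between electron count, full well capacity and tonal value can be sketched in Python. This is a simplified illustration, assuming a perfectly linear response and a made-up full well capacity of 25 electrons (like the electron counts in the graphics, the number itself does not matter). `tonal_value` and `is_clipped` are hypothetical names.

```python
def tonal_value(electrons: int, full_well: int) -> float:
    """Map an electron count to a tone from 0.0 (black) to 1.0 (white).

    Assumes a linear response: an empty well is black, a full well is white.
    """
    if full_well <= 0:
        raise ValueError("full_well must be positive")
    # A well cannot hold fewer than 0 or more than full_well electrons.
    collected = min(max(electrons, 0), full_well)
    return collected / full_well


def is_clipped(electrons: int, full_well: int) -> bool:
    """True when the well is saturated ("blown out")."""
    return electrons >= full_well


FULL_WELL = 25  # made-up capacity, for illustration only

for count in (0, 8, 13, 22, 30):
    print(count, tonal_value(count, FULL_WELL), is_clipped(count, FULL_WELL))
```

Counts of 25 and 30 electrons both come out as pure white here, which is why detail in a clipped highlight cannot be told apart afterwards.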

Color & Light in the Digital World

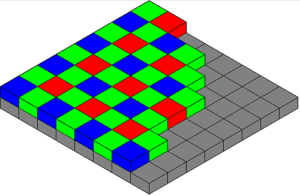

Since color information can’t be determined directly by the number of electrons in each pixel well, a color filter is placed over each pixel.

Attribution: Wikipedia

Most, but not all, CMOS sensors use a Bayer Filter which looks like a quilt of Red, Green, and Blue screens, with a single color screen covering each pixel as shown in the graphic.

Other color filter arrays, including the Bayer, are discussed in the Wikipedia link, below the graphic.

Each pixel is covered with a color filter, either red, green or blue. The color of each pixel is determined by the color of light (frequency of light wave) which passes through this filter.

The Bayer Filter is laid out with 50% Green, 25% Red, and 25% Blue pixel filters.

To the human eye, the perceived brightness of green is greater than that of red or blue, thus green filtered pixels are represented twice as often in the Bayer Filter.

Red light passes through the red filtered pixels, while green and blue light do not. Blue light passes through the blue filtered pixels, while red and green light do not. You get the gist…

Each pixel can only collect the primary color information of its assigned red, green or blue filter, along with the number of electrons collected in the pixel well, which determines tonal value.

Using this information, and a series of algorithms & interpolations, the camera can determine the color of each pixel contained on the sensor grid.
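To make the 50% green, 25% red, 25% blue layout concrete, here is a small Python sketch. It assumes the common RGGB arrangement of the Bayer pattern (other arrangements exist), and the function names are hypothetical. It only models which filter sits over each pixel, not the interpolation a camera performs afterwards.

```python
from collections import Counter


def bayer_filter_color(row: int, col: int) -> str:
    """Return the filter color over pixel (row, col), assuming an RGGB tile.

    Even rows alternate R, G, R, G ...; odd rows alternate G, B, G, B ...
    """
    if row % 2 == 0:
        return "R" if col % 2 == 0 else "G"
    return "G" if col % 2 == 0 else "B"


def filter_shares(rows: int, cols: int) -> dict:
    """Fraction of pixels under each filter color on a rows x cols grid."""
    counts = Counter(
        bayer_filter_color(r, c) for r in range(rows) for c in range(cols)
    )
    total = rows * cols
    return {color: counts[color] / total for color in "RGB"}


# Print a small 4 x 4 corner of the sensor grid, then the color shares.
for r in range(4):
    print(" ".join(bayer_filter_color(r, c) for c in range(4)))
print(filter_shares(4, 4))  # {'R': 0.25, 'G': 0.5, 'B': 0.25}
```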

The precision and accuracy with which this information is communicated and displayed in the final image is determined by the bit depth.

Bit Depth, Color Depth & Image Quality

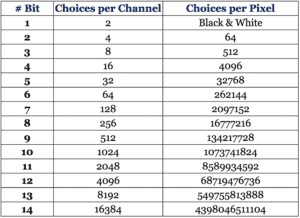

Bit depth specifies the number of unique color & tonal choices that are available to create an image. These color choices are denoted using a combination of zeros and ones, known as bits, which form binary code.

Bit depth is a rating system for a camera’s precision in communicating color and tonal values.

A Bit Depth Analogy

An adult and a 2-year-old child looking at the same landscape see close to the same thing, consisting of color and tonal values (light intensity).

The adult can describe this scene in vivid detail using a large number of descriptive words & complex vocabulary.

The 2-year-old child, seeing the same thing, has a hard time describing the scene accurately, having a limited vocabulary.

They both see and collect the same real world information, but one can describe it in vivid detail, while the other cannot.

Larger bit depth systems, like larger vocabularies, provide better precision when communicating information.

How Bit Depth Works in Photography

After the exposure time, defined by shutter speed, has elapsed, the signal information produced by each pixel is processed & converted into a digital language known as binary code.

The digital language takes the form of zeros and ones ( bits ) & communicates the values of color ( Red, Green, Blue ) & tone collected by each pixel.

The pixel specific tonal value is determined from the number of electrons ( charge ) collected and the color is determined using the Bayer Filter.

The precision of the communication is rated on the scale of bit depth. Larger bit depth systems allow more precision in describing the information collected by each pixel.

Binary Code & Bit Depth, Explained

We are used to numerical systems with a base of 10, such as 10, 20, 30, 1000, 100000.

Bits use a base 2 numerical system also known as binary.

A 1-bit system only has two possible outcomes. 1 or 0, on or off, true or false, yes or no, black or white.

A 1-bit photo only has two possible pixel colors, black and white.

Consider this like a child that only speaks two words, yes & no, black & white. You wouldn’t depend on this child to communicate a landscape scene with a large degree of accuracy or precision.

As the bit depth of the system increases the combinations of different possible choices or outcomes also increases.

Calculating Bit Depth in Photography

A 2-bit system would contain 4 choices as follows, (0,0)(0,1)(1,0)(1,1).

A 2-bit photo would contain 4 possible choices, black, white, dark grey and light grey.

A 3-bit depth system would contain 8 possible choices or outcomes, ranging from (0,0,0) to (1,1,1).

For example, the number of possible choices for a 3-bit system is found by using the binary base 2 and raising it to the power of 3, 2^3 = 8.

An N-Bit System contains 2^N possible choices for communication.
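The 2^N rule can be checked by listing every combination of zeros and ones. The following Python sketch uses the standard library's `itertools.product`; `choices` and `bit_patterns` are hypothetical helper names.

```python
from itertools import product


def choices(bits: int) -> int:
    """Number of distinct values an N-bit system can express (2^N)."""
    return 2 ** bits


def bit_patterns(bits: int) -> list:
    """Every possible combination of 0s and 1s for the given number of bits."""
    return list(product((0, 1), repeat=bits))


print(bit_patterns(2))        # [(0, 0), (0, 1), (1, 0), (1, 1)]
print(len(bit_patterns(3)))   # 8
print(choices(8))             # 256
```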

In photography, the number of bits determines the possibilities of color or tone a single pixel can display, known as bit depth.

This doesn’t mean that each possibility will necessarily exist in a photo, but it could.

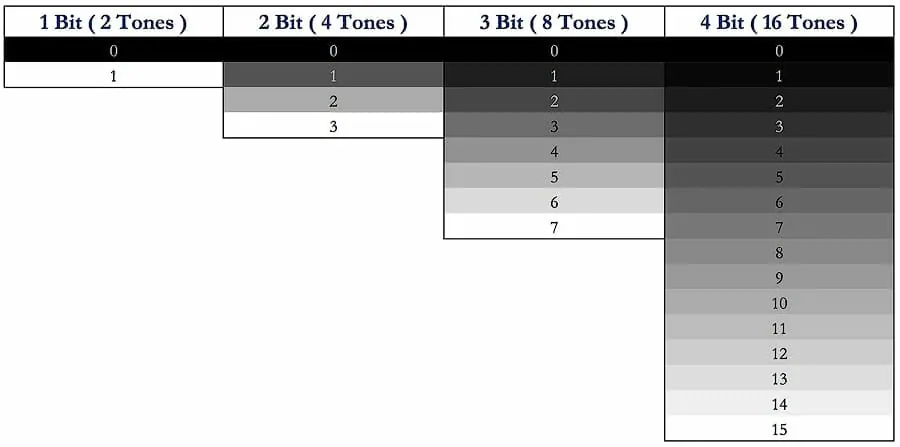

The example below shows the tonal values of black to white communicated with varying degrees of precision, by different bit depth systems.

The tonal range is the same for all bit depth systems, starting with black & ending in white.

The bit depth determines how many steps or possible choices within the tonal range can be communicated. Each step or possible choice is known as a bin. The more bins the more choices.

As shown in the graphic below, a 1-bit system can only communicate black and white. A 2-bit system can communicate black, white and two tones of gray.

The 3 & 4 bit systems provide a larger selection of choices used to communicate varying tonal values within the tonal range.

The change in the tones shown above is easily discernible to the human eye, which is unacceptable for photography.

Photographs must contain smooth transitions between color and tones, as seen in nature, producing realistic images.

As the bit depth of the system increases, the degree of precision with which information is communicated from the real world into the digital world also increases.

The graphic below shows an 8-bit system with 256 or ( 2^8 ) different bins. Due to the vast number of possible tonal choices the transition from one to the next isn’t discernible to the human eye. A JPEG image is 8-bit.
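The idea of bins can be sketched in Python by sorting one tonal value into the bins available at different bit depths. This assumes equal-width bins across the black to white range, which is a simplification (real cameras and file formats may space their steps differently). `quantize` is a hypothetical name.

```python
def quantize(tone: float, bits: int) -> int:
    """Return the bin index for a tone between 0.0 (black) and 1.0 (white).

    Bins are numbered 0 .. 2**bits - 1 and are assumed to be equally wide.
    """
    if not 0.0 <= tone <= 1.0:
        raise ValueError("tone must be between 0.0 and 1.0")
    levels = 2 ** bits
    # min() keeps pure white (1.0) inside the top bin.
    return min(int(tone * levels), levels - 1)


# The same mid-grey tone described with increasing precision.
for bits in (1, 2, 3, 4, 8):
    print(bits, "bit:", "bin", quantize(0.5, bits), "of", 2 ** bits)
```

With 1 bit the mid-grey tone can only be rounded to black or white, while with 8 bits it lands in bin 128 of 256.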

Color Channels & Color Depth

The example above was for black and white photographs only. Most digital cameras take color photographs.

These color photographs are produced using the three primary colors, red, green, and blue determined by the Bayer filter.

These are known as color channels. The tonal value associated with each color is determined by the signal strength.

JPEG files are usually 8-bit whereas RAW files are usually 12 to 16 bit. Some cameras have the ability to change their current bit rating through user defined settings.

On the Nikon D810 this is noted as “NEF (RAW) Recording” in the shooting menu.

Google your camera “brand-model” + “bit depth settings” for specific info on this setting.

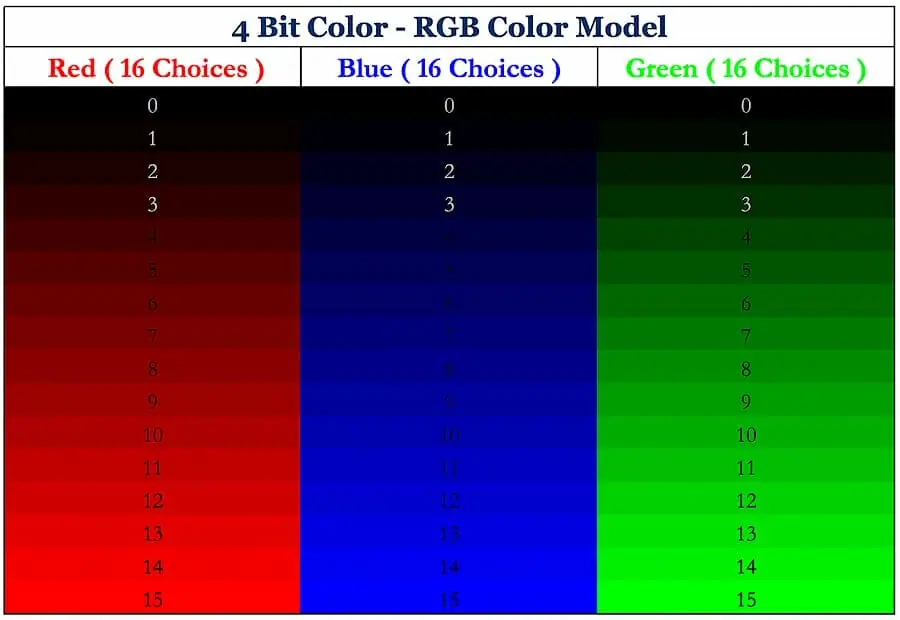

The example below shows the 4-bit color scale for RGB Primary colors red, green and blue. Bin 15 in each of the color channels is pure fully saturated color, also known as hue.

NOTE: Not all cameras process color the same way. The following example allows you to conceptualize this concept. It’s not meant to be technically accurate for a specific camera.

TECHNICAL NOTE: Although each of the color channels have the same amount of steps, the variation of green can still be seen all the way down to 1, where it’s hard to tell any difference in red at 1, and blue drops off at 2.

Humans perceive green as the brightest, red as the second brightest, and blue as the darkest, out of the three primary colors. This perception of color brightness, known as lightness or luminosity, is only a function of the eye’s physiology. Remember the Bayer Filter!

Calculation for 4 Bit RGB Color System

2^N = 2^4 = 16 color choices per primary color channel, as shown in the graphic above.

Each of the primary color channel bins can combine with each other to create new colors.

For example, Red ( 12 ), Blue ( 6 ), Green ( 15 ) would create a unique color and Red ( 1 ), Blue ( 2 ), Green ( 4 ) would create another unique color.

When a bin is set to 0, such as Red ( 0 ) that color is turned off, in other words black.

When a bin is set to ( 15 ), it is on, producing pure color and full saturation, known as a hue.

Red ( 15 ), Blue ( 0 ), Green ( 15 ) = Yellow ( 15 ) or pure yellow.

Red ( 15 ), Blue ( 15 ), Green ( 15 ) = White, since the RGB Color Model is additive.

Red ( 0 ), Blue ( 0 ), Green ( 0 ) = Black, since all colors are turned off.

The total number of possible color choices, per pixel, for this small 4-bit system, is calculated as follows;

Total Color Choices Per Pixel = 16*16*16 or 16^3 which equals 4096 total choices. 16 represents the number of color choices per channel. There are 3 primary color channels.

Each pixel on the image sensor can communicate color using 1 of 4096 possible choices.
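One way to see where the 4096 comes from is to pack the three 4-bit channel values into a single 12-bit number, so every color gets its own index from 0 to 4095. The Python sketch below is an illustration only; the red, green, blue packing order and the name `pack_rgb4` are assumptions, not how any particular camera stores color.

```python
def pack_rgb4(red: int, green: int, blue: int) -> int:
    """Pack three 4-bit channel values (0..15) into one 12-bit color index."""
    for value in (red, green, blue):
        if not 0 <= value <= 15:
            raise ValueError("4-bit channel values must be between 0 and 15")
    # Red occupies the top 4 bits, green the middle 4, blue the bottom 4.
    return (red << 8) | (green << 4) | blue


print(pack_rgb4(0, 0, 0))      # 0     (black, all channels off)
print(pack_rgb4(15, 15, 0))    # 4080  (pure yellow)
print(pack_rgb4(15, 15, 15))   # 4095  (white, the last of 4096 choices)

# Every combination maps to a different index, so there are 16 * 16 * 16 colors.
all_colors = {pack_rgb4(r, g, b)
              for r in range(16) for g in range(16) for b in range(16)}
print(len(all_colors))         # 4096
```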

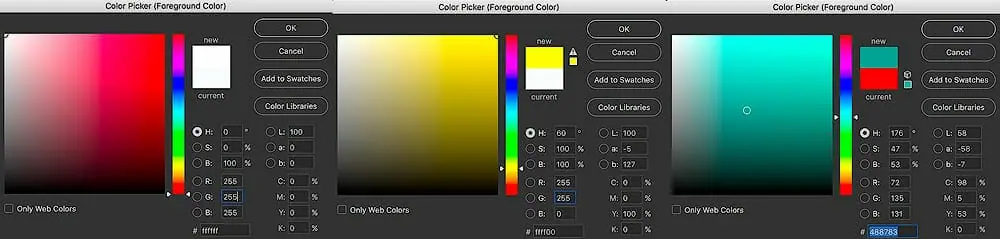

8 Bit JPEG File vs 14 Bit RAW File

Both files use the same three primary color channels, containing red, green and blue.

An 8-bit JPEG can record 2^8, or 256, possible outcomes for each of the 3 color channels.

The red channel can display 255 different variations of red, the green can display 255 variations of green and the blue, 255 variations of blue.

Variations of color = 255, not 256, because the remaining value, 0, means the channel is turned off (black). Black isn’t on the primary color scale but is used to calculate overall color.

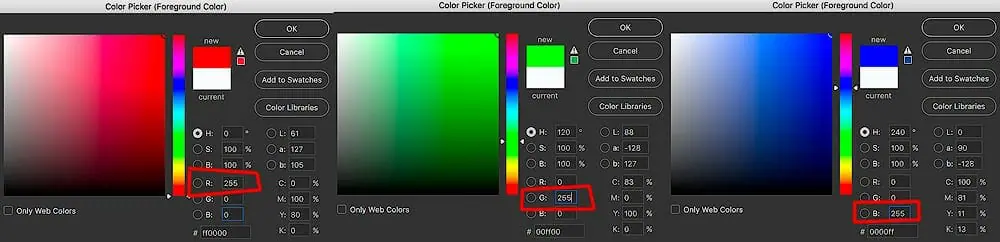

The Photoshop Color Picker displays 8 Bit Color. The following example shows:

- Pure Red, R(255), G(0), B(0)

- Pure Green, R(0), G(255), B(0)

- Pure Blue, R(0), G(0), B(255)

Each color channel has 256 possible outcomes or choices it can produce.

Therefore the number of possible choices, for each pixel, in a small 8-bit file is 256^3, or 256*256*256, which equals 16,777,216.

That’s almost 17 million different possible choices for every single pixel. There are millions of pixels on each sensor! This is a small JPEG file that the worst modern digital cameras can capture.

The graphic below shows:

- White, R(255), G(255), B(255) – Remember the RGB Color Model is additive.

- Yellow, R(255), G(255), B(0)

- And another random combination of color Cyan-Green, R(72), G(135), B(131)

The 14 Bit RAW File

The 14-bit file contains 2^14 possible variations for each of the 3 color channels. That’s 16,384 possible choices per color channel.

Since there are 3 color channels, red, green and blue, the total is 16,384^3 or 16,384*16,384*16,384, which equals 4,398,046,511,104.

That’s approximately 4.4 trillion different possible choices for each pixel. There are millions of pixels on each sensor.
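The 8-bit and 14-bit totals follow the same formula, which can be sketched in Python. `colors_per_pixel` is a hypothetical helper name, and the calculation assumes three channels that each use the full bit depth.

```python
def colors_per_pixel(bits_per_channel: int, channels: int = 3) -> int:
    """Total color choices per pixel: (2^bits) raised to the number of channels."""
    return (2 ** bits_per_channel) ** channels


jpeg_8bit = colors_per_pixel(8)
raw_14bit = colors_per_pixel(14)

print(f"{jpeg_8bit:,}")               # 16,777,216
print(f"{raw_14bit:,}")               # 4,398,046,511,104
print(f"{raw_14bit // jpeg_8bit:,}")  # 262,144 times more choices
```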

We have surpassed the 2-year-old child that can barely speak, we have surpassed the adult with a vivid and detailed vocabulary, we have arrived at a degree of precision that only machines can record and communicate.

The human eye, the second most (known) complex object on the planet, after the brain, has no problem discerning approximately 12 million different colors.

In terms of color & tone, machines have bypassed the precision that the human eye, engineered by trial and error, through millions of years of evolution, can discern.

Camera Sensor Size – Overview

Along with the number of pixels, sensors are also rated in terms of physical sensor size or surface area. The sensor surface area also determines the size of each pixel.

Physical sensor sizes are provided in terms of width and height, usually in millimeters. A standard sensor size such as 36mm × 24 mm is known as a full frame 35mm format camera.

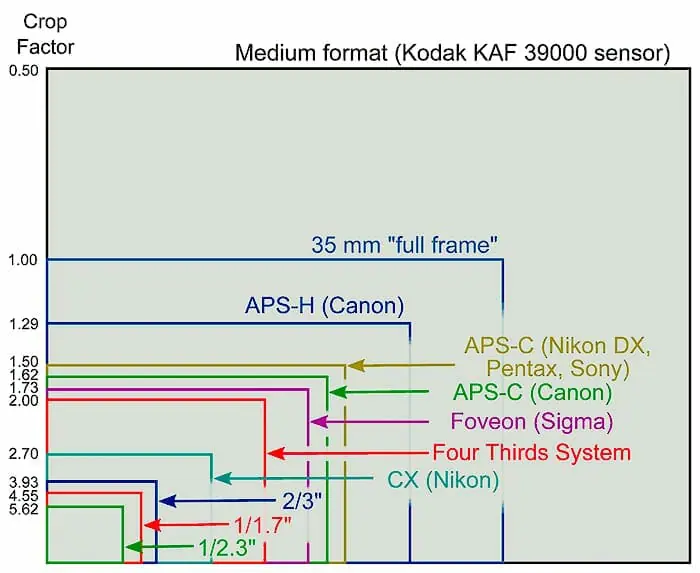

The following graphic shows a camera sensor size comparison for varying popular sensor formats.

Attribution: Moxfyre & Wikipedia

Larger sensor widths yield larger sensor surface areas providing more area for the capture of light information over a standard interval, known as exposure time.

Think of a sensor like the sail on a boat. The larger the sail, the greater the surface area, the more wind it will catch.

The larger the sensor, the greater the surface area, the more light ( photons ) it will catch.

Notice the massive difference in light collecting surface area between the APS-C vs full frame camera sensors. These cameras will produce much different overall image quality, with the larger far exceeding the smaller.
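The difference in surface area can be put into numbers with a short Python sketch. The 36mm × 24mm full frame size comes from the text above; the 22.3mm × 14.9mm APS-C size is an assumption for illustration, since APS-C dimensions vary slightly by manufacturer. `sensor_area_mm2` is a hypothetical name.

```python
def sensor_area_mm2(width_mm: float, height_mm: float) -> float:
    """Light collecting surface area of a rectangular sensor, in square mm."""
    return width_mm * height_mm


full_frame = sensor_area_mm2(36.0, 24.0)   # 864 square mm
aps_c = sensor_area_mm2(22.3, 14.9)        # assumed APS-C size, about 332 square mm

print(round(full_frame))
print(round(aps_c))
print(round(full_frame / aps_c, 1))        # full frame has about 2.6x the area
```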

There is a much smaller difference between the APS-H vs APS-C. These cameras will produce close to the same image quality, with slight variations.

This is explained in detail in the following sections.

Camera Sensor Crop Factor

The crop factor is a dimensionless reference number, associated with image sensors. It compares the diagonal distance across each specific camera sensor to the diagonal distance across the full frame camera sensor.

For diagonal distance think of a straight line from the top right corner to bottom left corner. This is also known as the hypotenuse.

Knowing the sensor width & height in millimeters, this distance is easily calculated using the Pythagorean Theorem.

Diagonal Distance = SQRT((Width^2)+(Height^2))

Where SQRT is the callout for Square Root.

If you don’t like math then Google, “Pythagorean theorem calculator”.

For example, the diagonal distance or hypotenuse of the 36mm by 24mm full frame can be found as follows: =SQRT((24^2)+(36^2)). The outcome is approximately 43.3mm.

Camera Crop Factor = 43.3 / Camera Sensor Diagonal Distance

A full frame camera would have a Crop Factor of 1, 43.3mm/43.3mm.

Smaller camera sensors such as a standard 22.3mm width, APS-C Sensor ( see graphic above ), would have a crop factor of approximately 1.6.
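The diagonal and crop factor formulas above translate directly into Python. In this sketch `math.hypot` performs the Pythagorean calculation; the function names are hypothetical, and the 14.9mm APS-C height is an assumption, since the text only gives the 22.3mm width.

```python
import math

FULL_FRAME_MM = (36.0, 24.0)  # width, height


def diagonal_mm(width_mm: float, height_mm: float) -> float:
    """Diagonal distance across the sensor: SQRT(width^2 + height^2)."""
    return math.hypot(width_mm, height_mm)


def crop_factor(width_mm: float, height_mm: float) -> float:
    """Full frame diagonal divided by this sensor's diagonal."""
    return diagonal_mm(*FULL_FRAME_MM) / diagonal_mm(width_mm, height_mm)


print(round(diagonal_mm(36.0, 24.0), 1))   # 43.3
print(round(crop_factor(36.0, 24.0), 1))   # 1.0
print(round(crop_factor(22.3, 14.9), 1))   # 1.6 (assumed APS-C height of 14.9mm)
```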

Quick Reference – Standard Camera Sensor Crop Factors:

- Full Frame Sensor Crop Factor = 1

- APS-H Sensor Crop Factor = 1.29

- APS-C Sensor Crop Factor = 1.5 to 1.6 depending on model.

- Foveon Sensor Crop Factor = 1.73

- Micro 4/3 Sensor Crop Factor = 2

Full Frame, Medium Format & Crop Camera Sensors

Digital cameras can be broken up into 3 different categories for sensor sizes, largest to smallest respectively, Medium Format, Full Frame, and Crop.

When making the following comparisons of image sensors, assume that each sensor compared is from the same fabrication year.

For example, although a crop sensor usually provides less quality & detail than a full frame sensor, a crop sensor from 2017 would most likely provide more quality and detail than a full frame sensor from the year 2000.

Types of Camera Sensors:

- Medium Format (Crop Factor < 1): Largest camera sensor size and usually highest cost. Medium format cameras are usually very bulky and heavy due to the large image sensor contained in the camera. They produce fantastic detail & color at the cost of weight & money.

- Full Frame (Crop Factor = 1): Standard for professional photographers & serious hobbyists. Provides fantastic image quality and dynamic range without the added bulk, weight, or cost of the medium format camera.

- Crop Sensor (Crop Factor > 1): Cheapest and smallest option. Smaller camera sensor size provides lower quality images with increased noise and less dynamic range compared to larger formats. For many photographers, the crop sensor camera is perfect for their specific skill level or use. They are not bad cameras, they just aren’t as good.

Camera Sensor Size – Why It Really Matters

A larger number of megapixels doesn’t always yield increased image quality.

There are mobile phones that capture 40-megapixel images which are not high quality.

The combination of the following provides a reasonable estimate of a camera’s image quality. Each is discussed in further detail below.

- Sensor size: Determines the light collecting surface area of the sensor.

- Sensor quality: The quality and age of the hardware used to fabricate the sensor. Newer hardware will yield better image quality, given everything else is constant.

- Underlying software quality: Algorithms and code running the camera’s operating systems & image processing. Newer software usually yields better image quality, given everything else is constant.

- Pixel width: Also known as pixel pitch. This is the width of each square pixel, which also determines its surface area.

- Megapixel count: How many pixels total are contained in the sensor.

- Bit-depth settings (see section above): How many colors and tonal values the sensor can capture and display in the final image.
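Sensor size, megapixel count and pixel width are linked, which a short Python sketch can show. It assumes square pixels that tile the full sensor width with no gaps, which is a simplification, and reuses the 36mm width and 7360-pixel width mentioned earlier. `pixel_pitch_um` is a hypothetical name.

```python
def pixel_pitch_um(sensor_width_mm: float, pixels_wide: int) -> float:
    """Approximate pixel width in micrometers (1 mm = 1000 micrometers)."""
    return sensor_width_mm * 1000 / pixels_wide


# Same 7360-pixel width on a full frame sensor and on a narrower 22.3mm sensor.
print(round(pixel_pitch_um(36.0, 7360), 2))   # 4.89
print(round(pixel_pitch_um(22.3, 7360), 2))   # 3.03
```

For the same pixel count, the smaller sensor has to use smaller pixels, so each one has less surface area for collecting light.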

Let’s Discuss…

For the following example, assume the latest pro model full frame camera from Nikon or Sony. The exact model does not matter.

Both of these companies produce the best image sensors currently on the market.

This does not mean that you need the latest & greatest camera to capture really high-quality images.

It only means that each generation of camera will get slightly better in the areas noted above, as software, hardware, and engineering improves.

A full frame camera sensor has a larger surface area to capture more light information over a standard amount of time.

This allows it to perform better in low light shooting scenarios than a crop sensor camera.

Having a larger sensor surface area also yields the ability to contain more pixels than a smaller crop sensor camera.

The more pixels a sensor contains, the more detail about the scene it can collect.

Remember, each pixel is a single color or tonal value.

For example, envision a photo, printed on a wall, that’s 3 feet or approximately 1 meter, wide.

It would be hard to tell what was going on in that photo if it was captured using a 10-pixel sensor.

There would only be 10 colors or tonal values used to portray the entire scene.

It would be very easy to decipher each exact detail in that photo if it was captured using a 40,000,000 pixel sensor.

Smaller pixel pitch (width), combined with larger sensor size, and the latest software & hardware, will produce the best image quality.

Now let’s discuss noise…

Picture Noise & Sensor Size

CMOS camera sensors and pixels inherently produce a small amount of noise. This is similar to radio static heard at low volumes in headphones. Even the best cameras with the optimal settings create small amounts of noise.

Noise is dependent on camera make and model as well as settings. Different types of noise make up the overall noise profile for a given image.

As a sensor collects more light, producing a larger signal, less overall noise is seen in the final image. The Signal to Noise Ratio (SNR or S/N) is used to describe this phenomenon.

In the graphic below, the pixel wells on the left have lower signal to noise ratios where the pixel wells moving towards the right have higher signal to noise ratios.

![]()

- Low signal to noise ratios exhibit a higher percentage of noise per overall signal produced, showing more overall noise in the image.

- High signal to noise ratios exhibit a lower percentage of noise per overall signal produced, showing less overall noise in the image.

The goal is to fill each pixel well to its corresponding tonal value maximum without clipping or losing data off the top end, thus increasing the Signal to Noise Ratio and image quality.

Images that contain larger proportions of dark tonal values will inherently have lower Signal to Noise Ratios revealing more visible noise. This is one reason low light and night sky images contain so much noise.

Images that contain larger proportions of lighter tonal values will have higher Signal to Noise Ratios revealing less visible noise.
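A rough Python sketch shows why brighter pixels have higher Signal to Noise Ratios. It assumes photon shot noise is the only noise source, in which case the noise equals the square root of the number of electrons collected. Real sensors add other noise types, so treat the numbers as illustrative only. `shot_noise_snr` is a hypothetical name.

```python
import math


def shot_noise_snr(electrons: float) -> float:
    """Signal to Noise Ratio when only photon shot noise is present.

    Signal = electrons collected, noise = sqrt(electrons),
    so the ratio works out to sqrt(electrons).
    """
    if electrons <= 0:
        return 0.0
    return electrons / math.sqrt(electrons)


# Darker pixels (fewer electrons) have a lower ratio than brighter pixels.
for electrons in (100, 2_500, 10_000):
    print(electrons, "electrons -> SNR of about", round(shot_noise_snr(electrons)))
```

Under this assumption, collecting 100 times more light only raises the ratio 10 times, but the direction is always the same: more light, cleaner signal.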

Due to this fact, slightly overexposing images, known as Expose to the Right or ETTR , provides higher Signal to Noise Ratios and overall better image quality, provided that the brightest pixels are not “clipped” or “blown out”.

I show this concept in the 3rd video at the top of the page.

In some shooting scenarios such as star, Milky Way & night sky photography, the light levels are so low that the image noise will be very high. Even the best camera sensors for low light, such as models made by Sony, still produce some noise.

Using simple Noise Reduction Techniques it’s very easy to combat this problem in Photoshop.

Dynamic Range, ISO & Sensor Size

The following video supplements this section and discusses the effect of ISO on dynamic range and image quality.

Dynamic Range is defined as the difference or range between the strongest undistorted signal (brightest tonal value) & the weakest undistorted signal (darkest tonal value) captured by an image sensor, in a single photo.

The larger the dynamic range the larger the range of tonal values and colors each image can capture and display.

For example, a camera with high dynamic range capability could shoot directly into the bright sunlight & still collect information from dark shadow regions, without producing much noise. This is shown in the video above.

Larger physical sensor sizes combined with larger megapixel counts provide increased camera performance, with less noise, especially in low-light shooting situations.

The aperture diameter & shutter speed control how much light is captured by each pixel, thus increasing or decreasing the signal strength.
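As a rough illustration of how these two settings trade off, the sketch below uses the standard approximation that the light reaching each unit of sensor area is proportional to shutter time divided by the f-number squared. The f-number stands in for aperture diameter here (a lower f-number means a wider opening on the same lens). The function names and example settings are my own assumptions, not something defined in this guide.

```python
import math


def relative_light(f_number, shutter_s):
    """Light per unit sensor area in arbitrary units, assumed proportional to t / N^2."""
    return shutter_s / f_number ** 2


def stops_between(light_a, light_b):
    """How many stops brighter (+) or darker (-) light_b is compared to light_a."""
    return math.log2(light_b / light_a)


base = relative_light(f_number=8.0, shutter_s=1 / 100)
wider = relative_light(f_number=4.0, shutter_s=1 / 100)    # open the aperture
longer = relative_light(f_number=8.0, shutter_s=1 / 50)    # slow the shutter

print(f"f/8 -> f/4 at 1/100 s:     {stops_between(base, wider):+.1f} stops")
print(f"1/100 s -> 1/50 s at f/8:  {stops_between(base, longer):+.1f} stops")
```

Halving the f-number quadruples the light (two stops), while doubling the shutter time doubles it (one stop). Either change increases the signal each pixel collects.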

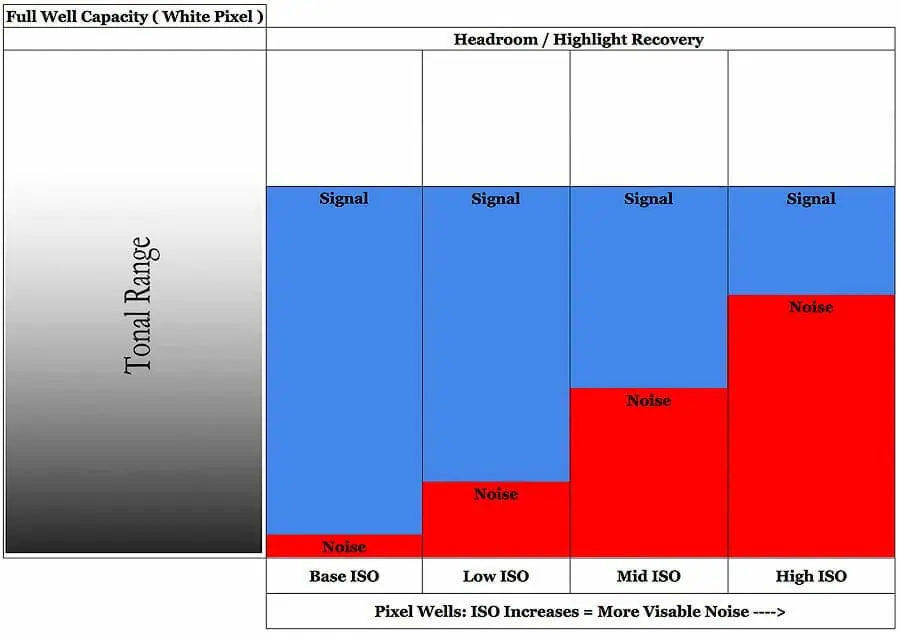

The ISO determines the amplification of the signal & inherent noise. The ISO also determines how much light is required for optimal exposure.

Higher ISO Values = Less Scene Light Required = Smaller S/N Ratio = Smaller Dynamic Range = More Image Noise

Lower ISO Values = More Scene Light Required = Higher S/N Ratio = Larger Dynamic Range = Less Image Noise

In the graphic above the ISO is increased, which amplifies the baseline inherent noise seen in the Base ISO Column.

As the ISO becomes larger, less overall light (signal) is required to produce the same tonal value. As the ISO increases, the noise levels are amplified, creating more overall noise in the image.

As ISO increases, the amount of undistorted signal, which reflects the dynamic range, decreases.

No matter the camera, higher ISO values will always produce more overall noise and less overall dynamic range in the final RAW file.

Unlike megapixel counts, having a larger dynamic range is always a positive camera attribute. Dynamic range is provided in stops, which is a measure of light. For each one-stop increase, the amount of light information collected doubles.
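The relationship between ISO, stops, and dynamic range described above can be sketched in a few lines of Python. This is a deliberately simplified model under my own assumptions: the full well capacity, read noise, and base ISO are hypothetical values, read noise is treated as constant, and raising the ISO is modeled as nothing more than shrinking the largest signal that can be recorded before clipping. Real sensors behave in more complicated ways, so treat the numbers as illustrative only.

```python
import math

BASE_ISO = 100          # hypothetical base ISO
FULL_WELL_E = 60_000    # hypothetical strongest undistorted signal, in electrons
READ_NOISE_E = 3.0      # hypothetical weakest usable signal (noise floor), in electrons


def dynamic_range_stops(iso):
    """Dynamic range in stops: log2(strongest signal / weakest signal)."""
    gain = iso / BASE_ISO
    # With more amplification, less light is needed to reach the maximum
    # tonal value, so the strongest recordable signal shrinks.
    max_signal_e = FULL_WELL_E / gain
    return math.log2(max_signal_e / READ_NOISE_E)


def stops_to_ratio(stops):
    """Each stop doubles the light, so N stops is a factor of 2^N."""
    return 2.0 ** stops


for iso in (100, 200, 400, 800, 1600, 3200, 6400):
    print(f"ISO {iso:>4}: {dynamic_range_stops(iso):.1f} stops")

print(f"A 3-stop difference is a {stops_to_ratio(3):.0f}x difference in light")
```

In this model every doubling of ISO costs exactly one stop of dynamic range. Because stops are a base-2 scale, small differences in the rated number add up quickly: three stops corresponds to a factor of 8.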

Sony currently produces some of the highest dynamic range sensors on the market for full frame cameras. These camera sensors are rated at approximately 14.8 stops. Many Nikon cameras use Sony sensors due to this fact.

These new sensors also produce an extremely low amount of noise at very high ISO values such as 5000 or 6400.

Canon continues to produce their own sensors which significantly lack in dynamic range, comparatively, rated at approximately 11.8 stops for their top model cameras. They also produce a much larger amount of noise at high ISO values.

This is a scientific fact; there is no dispute. Sony makes better sensors than Canon for landscape and outdoor photography.

Camera & Sensor Recommendations

Each photographer has different sensor size requirements to produce the images they desire. I’m not going to tell you what camera to buy, but will provide some of my personal favorites.

Gaining an understanding of how camera sensors actually work, and experimenting on your own, is the best way to figure out which camera and sensor size best fits your needs.

I am a landscape & outdoor photographer. I don’t shoot weddings, work for clients, or do product work. Therefore I can’t recommend cameras that I haven’t personally tested.

That being said, I’m happy to recommend a few different camera models for landscape & nature photographers. These may not be specific to you, but they can help in cutting down decision fatigue. They may also work for other niches of photography, but I can’t guarantee it:)

You can also visit the What’s in My Camera Bag & Night Photography Cameras & Lens Recommendation pages on this site.

PRO TIP: If you really enjoy photography buy the best camera you can afford. Then you don’t have to upgrade multiple times over the coming years. It saves money in the end. I know from experience…

I think Sony makes great low-end models with great sensors. Their high-end models have fantastic sensors but are plastic and cheaply made. I prefer Nikon at the high end, with metal bodies, and the same Sony sensors. This is my personal preference.

Here are some camera recommendations, least expensive to most expensive, crop to full frame.

- Sony a5100

- Sony Alpha a6300

- Nikon D610

- Nikon D750 – Fantastic camera, especially for the cost. I highly recommend this camera to anyone that wants a full frame body without the cost of the D810. It’s not quite as good, but it’s close.

- Sony A7R – Great sensor and image quality. A fantastic lightweight travel camera, if you don’t expect to beat it up too much. I think these bodies feel cheap and easily damaged. I don’t trust them for backpacking and mountaineering use.

- Sony A7RII – Updated version of model above. Same thoughts…

- Nikon D800 (My Backup Camera) – I feel the same about this camera as the D810, below. The dynamic range isn’t quite as good, but it’s still a great all-around camera. I don’t carry this backup for backpacking / traveling. This is a backup camera for long-distance photo trips. This used to be my main camera and has worked perfectly for the past 6 years.

- Nikon D810 (My Main Camera) – Great dynamic range and low noise at high ISO. Tough metal body perfect for mountaineering and backpacking. I highly recommend this camera to all landscape photographers who expect their gear to perform at the top level and take a beating at the same time.

How to 10X Your Learning Speed

The best way to improve quickly is by learning firsthand from someone who has optimized their skills over a decade or more through trial and error.

You can’t read blogs and watch internet videos to do this.

I offer workshops & tours for all skill, fitness, and age levels.

Over a 3-day weekend, I can teach you everything I know, plus provide 1 on 1 feedback that will quickly improve your skills.

I’ve seen students learn more in a 3-day trip than they have in 20 years of trying to learn on their own.

Check out my workshops & tours, right here.